Introduction

Cloud computing has continuously evolved, reshaping the way applications are designed, developed, and deployed. Among the latest innovations, serverless architecture stands out, promising developers more agility, scalability, and cost-efficiency. But is serverless truly the future of cloud computing?

Let’s look at the core concepts, benefits, and potential challenges of serverless architecture to assess its future role in the cloud landscape.

What is Serverless Architecture?

Serverless architecture refers to a cloud-native development model that enables developers to build and run applications without managing the underlying infrastructure. Despite the term “serverless,” servers are still involved; however, they are abstracted away from developers.

In serverless models, the cloud provider manages the servers behind services such as AWS Lambda, Google Cloud Functions, and Azure Functions, scaling capacity dynamically with demand and charging only for actual usage.

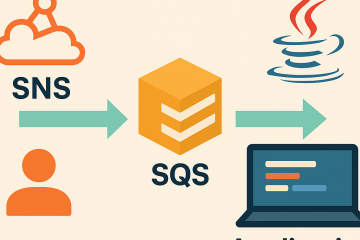

How Does Serverless Work?

When a serverless application is triggered, the cloud provider dynamically allocates resources, executes the code, and manages the infrastructure. Developers only need to upload their code and specify the event triggers, leaving infrastructure concerns entirely to the cloud provider.
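To make the "upload code and specify triggers" idea concrete, here is a minimal sketch of what a function might look like. It assumes a platform that calls a Python function with an event payload and a context object, which is the general shape several FaaS platforms use; the `name` field in the event and the response layout are illustrative assumptions, not a specific provider's contract.

```python
import json


def handler(event, context=None):
    """Entry point the platform would invoke once per triggering event.

    `event` carries the trigger's payload (assumed here to be a dict with an
    optional "name" key); `context` would hold runtime metadata and is unused.
    """
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local simulation of a single invocation; in production the provider,
    # not this script, would call handler() when the trigger fires.
    print(handler({"name": "serverless"}))
```

Note that the function contains no server setup, port binding, or scaling logic. Those concerns would sit with the platform.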

Key Components:

- Functions as a Service (FaaS): Executes backend logic triggered by events.

- Backend as a Service (BaaS): Manages database, storage, authentication, and other backend functionalities.

Advantages of Serverless Architecture

1. Cost Efficiency

Serverless computing charges you only for the time and resources your code consumes. No compute charges accrue while functions are idle, which can significantly reduce operational expenses for workloads with intermittent or unpredictable traffic.
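A rough way to reason about usage-based billing is to multiply invocations by duration and memory. The sketch below assumes a pricing model with a per-GB-second compute rate plus a per-million-requests fee; the rates used are hypothetical placeholders chosen for easy arithmetic, not any provider's actual prices.

```python
def monthly_cost(invocations, avg_duration_s, memory_gb,
                 price_per_gb_second, price_per_million_requests):
    """Estimate a monthly bill under an assumed pay-per-use pricing model."""
    # Compute charge: total GB-seconds consumed times the per-unit rate.
    compute = invocations * avg_duration_s * memory_gb * price_per_gb_second
    # Request charge: billed per million invocations.
    requests = invocations / 1_000_000 * price_per_million_requests
    return compute + requests


if __name__ == "__main__":
    # Hypothetical rates, for illustration only.
    RATE_GB_SECOND = 0.00002
    RATE_MILLION_REQUESTS = 0.20

    busy = monthly_cost(1_000_000, 0.2, 0.5, RATE_GB_SECOND, RATE_MILLION_REQUESTS)
    idle = monthly_cost(0, 0.2, 0.5, RATE_GB_SECOND, RATE_MILLION_REQUESTS)
    print(f"busy month: ${busy:.2f}")
    print(f"idle month: ${idle:.2f}")
```

Under these assumed rates, a month with no invocations yields no compute or request charge, which is the property the paragraph above describes. Other services an application depends on, such as storage, may still bill separately.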

2. Automatic Scaling

Serverless automatically scales your application up or down in response to demand, providing seamless elasticity without manual intervention.

3. Faster Deployment

With no infrastructure to manage, teams can rapidly deploy and iterate applications, significantly reducing time-to-market.

4. Enhanced Developer Productivity

Developers can concentrate on core application logic rather than infrastructure management, boosting overall productivity.

Potential Challenges and Limitations

While serverless offers numerous advantages, it’s important to understand its challenges:

1. Cold Start Latency

The first invocation of a function after a period of inactivity may incur extra latency while the provider initializes a new execution environment. These “cold starts” can hurt the user experience in latency-sensitive applications.
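One common way to soften cold starts is to do expensive setup once per execution environment rather than once per request. The sketch below simulates that pattern locally, assuming the platform keeps the loaded module alive between invocations while the environment is warm; `_get_client` and the fake 50 ms setup delay are hypothetical stand-ins for something like opening a database connection.

```python
import time

_client = None      # cached across invocations in the same environment
_init_count = 0     # how many times the expensive setup has run


def _get_client():
    """Create the (simulated) expensive resource on first use only."""
    global _client, _init_count
    if _client is None:
        time.sleep(0.05)  # stand-in for slow setup work
        _init_count += 1
        _client = {"connected": True}
    return _client


def handler(event, context=None):
    """Per-invocation entry point; reuses the cached client when warm."""
    client = _get_client()
    return {"ok": client["connected"], "initializations": _init_count}


if __name__ == "__main__":
    for label in ("cold", "warm"):
        start = time.perf_counter()
        handler({})
        elapsed_ms = (time.perf_counter() - start) * 1000
        print(f"{label} invocation took {elapsed_ms:.1f} ms")
```

The first call pays the setup cost and later calls skip it. This does not eliminate cold starts, since a fresh environment still has to initialize, but it keeps the penalty from repeating on every request.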

2. Limited Control

Abstracted infrastructure limits visibility and control, potentially complicating debugging and performance optimization.

3. Vendor Lock-in

Serverless architectures are often provider-specific, leading to potential vendor lock-in risks.
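A frequently suggested way to limit lock-in is to keep business logic in plain functions and confine provider-specific details to thin adapters. The following is a minimal sketch of that idea; both adapter shapes (an event dict in one case, a dict of query parameters in the other) are simplified assumptions rather than exact provider interfaces.

```python
import json


def build_greeting(name: str) -> dict:
    """Provider-independent business logic with no platform imports."""
    return {"message": f"Hello, {name}!"}


def event_style_adapter(event: dict, context=None) -> dict:
    """Adapter for a platform that passes an event dict (assumed shape)."""
    name = event.get("name", "world")
    return {"statusCode": 200, "body": json.dumps(build_greeting(name))}


def http_style_adapter(query_params: dict) -> tuple:
    """Adapter for a platform that passes HTTP query parameters (assumed shape)."""
    name = query_params.get("name", "world")
    return json.dumps(build_greeting(name)), 200


if __name__ == "__main__":
    print(event_style_adapter({"name": "A"}))
    print(http_style_adapter({"name": "B"}))
```

Moving to another provider would then mean writing a new adapter rather than rewriting `build_greeting`. Dependencies on provider-specific databases, queues, or authentication services are harder to isolate this way.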

Real-world Use Cases

Serverless architecture is ideal for specific scenarios such as:

- Web applications and APIs

- Real-time data processing

- Event-driven applications

- Microservices

- Backend operations like data transformation and automation workflows

Is Serverless the Future?

The answer depends on context. Serverless has transformative potential for applications that need dynamic scalability, rapid development cycles, and reduced management overhead. However, traditional infrastructure or containerized approaches may still be the better fit for applications with strict latency targets, long-running workloads, or specific compliance requirements.

Considering continuous improvements in latency, developer tools, and interoperability, serverless architecture is likely to gain broader adoption, becoming a crucial component in cloud computing.

Conclusion

Serverless architecture undeniably represents a significant advancement in cloud computing, offering impressive benefits around scalability, cost-efficiency, and agility. While not universally optimal, its growing maturity indicates that serverless will indeed play a substantial role in the future of cloud computing.
