As modern systems continue to embrace microservices, public APIs, and high-volume traffic, controlling how consumers access APIs becomes critical. Without proper rate limiting, even a single misbehaving client can overwhelm your application—leading to degraded performance, increased latency, and potential downtime.

Rate limiting and throttling safeguard your APIs by controlling the number of requests a client can make within a specific time window. In Spring Boot, one of the most effective and developer-friendly libraries for this purpose is Bucket4j.

This guide provides a clear, practical look at implementing API rate limiting using Bucket4j, covering architectural considerations, token bucket mechanics, real-world examples, and integration patterns for both monolithic and distributed systems.

Understanding Rate Limiting & Throttling

Rate limiting ensures clients do not exceed predefined request quotas. It protects applications from:

- Traffic spikes
- Abuse or brute-force attacks
- Over-consumption of resources
- API misuse
- Out-of-memory and thread starvation crashes

Rate Limiting vs. Throttling

| Concept | Meaning |
|---|---|
| Rate Limiting | Restricts the number of requests allowed in a given time frame. |
| Throttling | Delays or slows down requests when rate limits are approached or exceeded. |

Both mechanisms prevent overload and ensure fair usage.
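To make the difference concrete, here is a minimal plain-Java sketch (not Bucket4j code) built around a hypothetical `Pacer` class that admits one request per fixed interval. The same call either refuses a request that would have to wait (rate limiting, `maxWaitNanos = 0`) or tells the caller how long to wait (throttling, `maxWaitNanos > 0`). This is fixed-interval pacing rather than a token bucket, so it allows no bursts; it is only meant to contrast the two behaviours in the table.

```java
import java.util.function.LongSupplier;

/** Hypothetical pacer: admits one request per interval; callers either wait (throttle) or are refused (limit). */
final class Pacer {
    private final long intervalNanos;
    private final LongSupplier clock; // nanosecond clock, injectable for tests
    private long nextFreeNanos;       // earliest time the next request may start

    Pacer(long intervalNanos, LongSupplier clock) {
        this.intervalNanos = intervalNanos;
        this.clock = clock;
        this.nextFreeNanos = clock.getAsLong();
    }

    /**
     * Returns how many nanoseconds the caller must wait before proceeding, or -1 if that wait
     * would exceed maxWaitNanos. maxWaitNanos = 0 gives strict rate limiting; a positive value gives throttling.
     */
    synchronized long reserve(long maxWaitNanos) {
        long now = clock.getAsLong();
        long slot = Math.max(now, nextFreeNanos);
        long wait = slot - now;
        if (wait > maxWaitNanos) {
            return -1; // rejected: nothing is booked
        }
        nextFreeNanos = slot + intervalNanos; // book this slot for the caller
        return wait;                          // 0 = proceed now, > 0 = delay first
    }
}
```

A caller that receives a positive value would sleep for that long (for example with `TimeUnit.NANOSECONDS.sleep(wait)`) before proceeding; a caller that receives -1 would answer with 429 instead.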

Why Bucket4j?

Bucket4j is a Java-native library that implements the Token Bucket Algorithm, a widely used method for managing request consumption.

Key Features of Bucket4j

- Lightweight and high-performance
- Millisecond-level precision
- Built-in support for distributed caching (Hazelcast, Redis, Infinispan)
- Supports multiple bandwidth limits
- Thread-safe and production-ready

How the Token Bucket Algorithm Works

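- A bucket holds up to a predefined number of tokens (its capacity).
- Each incoming request consumes one token.
- Tokens are refilled at a fixed rate, up to the capacity.
- If the bucket is empty, the request is denied or delayed.

This caps the long-term request rate at the refill rate while still allowing short bursts of up to the bucket's capacity, which makes rate-limit enforcement predictable.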

Example:
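Consider a bucket with a capacity of 5 tokens that refills at 5 tokens per second. A client can burst 5 requests at once; the sixth is refused, and after 200 ms exactly one token (5 tokens/s × 0.2 s) has been earned back. The plain-Java sketch below implements that behaviour with a hypothetical `TokenBucket` class and an injectable nanosecond clock so it can be tested deterministically. It is an illustration of the algorithm, not Bucket4j's implementation; Bucket4j's own `Bucket` does this work for you.

```java
import java.util.function.LongSupplier;

/** Hypothetical minimal token bucket: up to `capacity` tokens, refilled at refillTokens per refillPeriodNanos. */
final class TokenBucket {
    private final long capacity;
    private final long refillTokens;
    private final long refillPeriodNanos;
    private final LongSupplier clock; // nanosecond clock, e.g. System::nanoTime, injectable for tests
    private long tokens;
    private long lastRefillNanos;

    TokenBucket(long capacity, long refillTokens, long refillPeriodNanos, LongSupplier clock) {
        if (capacity <= 0 || refillTokens <= 0 || refillPeriodNanos <= 0) {
            throw new IllegalArgumentException("all parameters must be positive");
        }
        this.capacity = capacity;
        this.refillTokens = refillTokens;
        this.refillPeriodNanos = refillPeriodNanos;
        this.clock = clock;
        this.tokens = capacity; // a new bucket starts full
        this.lastRefillNanos = clock.getAsLong();
    }

    /** Takes one token if available; false means the request should be denied (or delayed). */
    synchronized boolean tryConsume() {
        refill();
        if (tokens == 0) {
            return false;
        }
        tokens--;
        return true;
    }

    synchronized long availableTokens() {
        refill();
        return tokens;
    }

    // Adds the whole tokens earned since the last refill, never exceeding capacity.
    private void refill() {
        long now = clock.getAsLong();
        long earned;
        try {
            earned = Math.multiplyExact(now - lastRefillNanos, refillTokens) / refillPeriodNanos;
        } catch (ArithmeticException overflow) {
            earned = capacity; // idle for an extremely long time: simply refill completely
        }
        if (earned <= 0) {
            return;
        }
        if (earned >= capacity - tokens) {
            tokens = capacity;
            lastRefillNanos = now;
        } else {
            tokens += earned;
            // advance only by the time "paid for" by whole tokens, keeping the fractional remainder
            lastRefillNanos += earned * refillPeriodNanos / refillTokens;
        }
    }
}
```

In production you would pass `System::nanoTime` as the clock; the injectable clock exists only so the refill arithmetic can be checked without sleeping.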

Implementing Bucket4j in Spring Boot

Step 1: Add Dependency

Maven

(Optional modules are available for Redis and Hazelcast integration.)

Step 2: Create a Rate Limiting Filter

A common approach is to apply rate limiting per API path or per client IP address.
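Below is a minimal sketch of the per-client bookkeeping such a filter needs, assuming one limiter per key kept in a `ConcurrentHashMap`. `Limiter` and `PerClientRateLimiter` are hypothetical names, and the key combines IP and path to cover both strategies just mentioned. To stay dependency-free the servlet wiring is omitted; in a Spring `OncePerRequestFilter` you would typically build the key from `request.getRemoteAddr()` and `request.getRequestURI()`, call `tryAcquire`, and either continue the filter chain or set status 429 on the response. With Bucket4j, the factory could return an adapter such as `() -> bucket.tryConsume(1)` around a newly built `Bucket`.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Supplier;

/** Grants or refuses a single request (hypothetical; a Bucket4j Bucket could be adapted to it). */
interface Limiter {
    boolean tryConsume();
}

/** Hypothetical helper holding one limiter per client key, created lazily on first use. */
final class PerClientRateLimiter {
    private final Map<String, Limiter> limiters = new ConcurrentHashMap<>();
    private final Supplier<Limiter> factory;

    PerClientRateLimiter(Supplier<Limiter> factory) {
        this.factory = factory;
    }

    /** Key combining client IP and request path, so each path gets its own quota per client. */
    static String keyFor(String remoteAddr, String requestPath) {
        return remoteAddr + "|" + requestPath;
    }

    /** Returns true if the request may proceed, false if the filter should answer 429. */
    boolean tryAcquire(String clientKey) {
        // computeIfAbsent on ConcurrentHashMap is atomic, so concurrent first requests share one limiter
        return limiters.computeIfAbsent(clientKey, k -> factory.get()).tryConsume();
    }

    int trackedClients() {
        return limiters.size();
    }
}
```

The map gains an entry for every new key, so a real deployment would usually evict idle entries, for example by storing the limiters in a cache with expiry.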

Step 3: Register Filter in Spring Boot

Applying Multiple Rate Limits (Optional)

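Combining limits, for example a short-term burst limit with a longer-term quota, enforces layered protection: a request is allowed only while every limit still has tokens available.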
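Bucket4j allows more than one limit to be added to the same bucket. The plain-Java sketch below imitates that idea with a hypothetical `MultiLimitBucket`: each limit is a `{capacity, refillTokens, refillPeriodNanos}` triple, and the test combines a burst limit of 3 per second with a quota of 5 per minute. All limits are checked before any token is taken, so a refusal by one limit does not drain the others.

```java
import java.util.function.LongSupplier;

/** Hypothetical bucket with several limits; a request passes only if every limit has a token. */
final class MultiLimitBucket {
    private final long[] capacity, refillTokens, periodNanos, tokens, lastRefill;
    private final LongSupplier clock;

    /** Each limit is {capacity, refillTokens, refillPeriodNanos}; all values are assumed positive. */
    MultiLimitBucket(LongSupplier clock, long[]... limits) {
        int n = limits.length;
        capacity = new long[n];
        refillTokens = new long[n];
        periodNanos = new long[n];
        tokens = new long[n];
        lastRefill = new long[n];
        long now = clock.getAsLong();
        for (int i = 0; i < n; i++) {
            capacity[i] = limits[i][0];
            refillTokens[i] = limits[i][1];
            periodNanos[i] = limits[i][2];
            tokens[i] = capacity[i]; // every limit starts full
            lastRefill[i] = now;
        }
        this.clock = clock;
    }

    synchronized boolean tryConsume() {
        long now = clock.getAsLong();
        for (int i = 0; i < tokens.length; i++) {
            refill(i, now);
        }
        // Check every limit before taking anything, so a refusal does not drain the other limits.
        for (long t : tokens) {
            if (t < 1) {
                return false;
            }
        }
        for (int i = 0; i < tokens.length; i++) {
            tokens[i]--;
        }
        return true;
    }

    // Greedy refill of one limit (assumes elapsed * refillTokens fits in a long).
    private void refill(int i, long now) {
        long earned = (now - lastRefill[i]) * refillTokens[i] / periodNanos[i];
        if (earned <= 0) {
            return;
        }
        if (earned >= capacity[i] - tokens[i]) {
            tokens[i] = capacity[i];
            lastRefill[i] = now;
        } else {
            tokens[i] += earned;
            lastRefill[i] += earned * periodNanos[i] / refillTokens[i];
        }
    }
}
```

With these two limits a client can send 3 requests in a burst, but no more than about 5 per minute overall, which is the layered behaviour described above.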

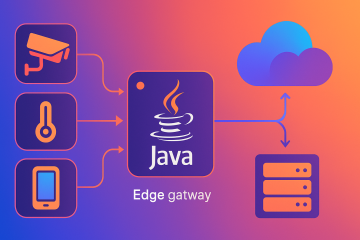

Distributed Rate Limiting with Redis

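For microservices running multiple pods/instances, in-memory buckets are insufficient: each instance tracks its own tokens, so a client whose requests are spread across N instances can receive up to N times the intended limit. Use Redis to share bucket state.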

Add Redis dependency:

Create Redis-backed bucket:

This ensures consistent throttling across the cluster.
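The exact Redis wiring depends on the Bucket4j module and Redis client you choose, so rather than guess at that API, here is a plain-Java sketch of the underlying idea: every instance reads the bucket state from a shared store, refills and decrements it locally, and writes it back with an atomic compare-and-set, retrying if another instance updated it first. `SharedStore` is a hypothetical in-memory stand-in for Redis, and two `DistributedLimiter` objects play the role of two pods. Optimistic compare-and-swap is one common technique for this; it is not necessarily how Bucket4j's Redis integration works internally.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.function.LongSupplier;

/** Immutable bucket snapshot that every application instance reads and writes. */
record BucketState(long tokens, long lastRefillNanos) {}

/** Hypothetical stand-in for a shared store such as Redis: updates succeed only via compare-and-set. */
final class SharedStore {
    private final ConcurrentMap<String, BucketState> map = new ConcurrentHashMap<>();

    BucketState get(String key) {
        return map.get(key);
    }

    boolean compareAndSet(String key, BucketState expected, BucketState updated) {
        return expected == null
                ? map.putIfAbsent(key, updated) == null
                : map.replace(key, expected, updated);
    }
}

/** One application instance's view of the limiter; every instance points at the same SharedStore. */
final class DistributedLimiter {
    private final SharedStore store;
    private final long capacity, refillTokens, refillPeriodNanos;
    private final LongSupplier clock;

    DistributedLimiter(SharedStore store, long capacity, long refillTokens, long refillPeriodNanos, LongSupplier clock) {
        this.store = store;
        this.capacity = capacity;
        this.refillTokens = refillTokens;
        this.refillPeriodNanos = refillPeriodNanos;
        this.clock = clock;
    }

    boolean tryConsume(String key) {
        while (true) { // optimistic retry: another instance may write between our read and our write
            long now = clock.getAsLong();
            BucketState current = store.get(key);
            BucketState state = refill(current == null ? new BucketState(capacity, now) : current, now);
            if (state.tokens() < 1) {
                return false; // empty: reject without writing
            }
            BucketState next = new BucketState(state.tokens() - 1, state.lastRefillNanos());
            if (store.compareAndSet(key, current, next)) {
                return true;
            }
        }
    }

    // Greedy refill, as in a local token bucket (assumes elapsed * refillTokens fits in a long).
    private BucketState refill(BucketState s, long now) {
        long earned = (now - s.lastRefillNanos()) * refillTokens / refillPeriodNanos;
        if (earned <= 0) {
            return s;
        }
        if (earned >= capacity - s.tokens()) {
            return new BucketState(capacity, now);
        }
        return new BucketState(s.tokens() + earned, s.lastRefillNanos() + earned * refillPeriodNanos / refillTokens);
    }
}
```

Because both "pods" consume from the same stored state, a quota of 3 is shared across them rather than granted to each one separately.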

Best Practices for API Rate Limiting

1. Choose the Right Limit Strategy

- Per IP
- Per user
- Per API key
- Per tenant (SaaS)
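A small sketch of how the chosen strategy could translate into a bucket key. `RequestInfo` and `KeyStrategy` are hypothetical types standing in for whatever your filter extracts from the request (for example the remote address, the authenticated principal, an API-key header, or a tenant header). Prefixing the key with the strategy name keeps the namespaces apart, and falling back to the IP for requests that lack the chosen identity is one possible design choice, not a requirement. Records and switch expressions need Java 16 or later.

```java
/** Hypothetical, framework-free view of the request fields a filter might extract (null if absent). */
record RequestInfo(String remoteAddr, String userId, String apiKey, String tenantId) {}

/** Hypothetical strategies for deciding which bucket a request is charged to. */
enum KeyStrategy {
    PER_IP, PER_USER, PER_API_KEY, PER_TENANT;

    /** Builds the bucket key; anonymous or incomplete requests fall back to a per-IP key. */
    String keyFor(RequestInfo r) {
        String id = switch (this) {
            case PER_IP -> r.remoteAddr();
            case PER_USER -> r.userId();
            case PER_API_KEY -> r.apiKey();
            case PER_TENANT -> r.tenantId();
        };
        return id != null ? name() + ":" + id : "PER_IP:" + r.remoteAddr();
    }
}
```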

2. Monitor Rate Limit Metrics

Use:

- Spring Boot Actuator
- Grafana

3. Communicate Limits to Clients

Send response headers that tell clients how many requests they have left and when they may retry.

4. Implement Graceful Degradation

Return:

- 429 Too Many Requests
- A Retry-After header giving the number of seconds the client should wait
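A sketch of the values a filter could attach to a response, assuming the remaining quota and the wait time (in nanoseconds) are already known. `RateLimitHeaders` is a hypothetical helper. Retry-After is a standard HTTP header whose delay form is a whole number of seconds, so the wait is rounded up to stop clients from retrying too early. The name X-RateLimit-Remaining is a widely used convention rather than a standard header, so treat it as a placeholder. In Bucket4j, `bucket.tryConsumeAndReturnRemaining(1)` returns a `ConsumptionProbe` whose `getRemainingTokens()` and `getNanosToWaitForRefill()` could supply these two inputs.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper: the status and headers a rate-limit filter could send back. */
final class RateLimitHeaders {
    static final int TOO_MANY_REQUESTS = 429;

    /** Retry-After is expressed in whole seconds, so round a (non-negative) wait up, never down. */
    static long retryAfterSeconds(long nanosToWait) {
        long nanosPerSecond = 1_000_000_000L;
        return nanosToWait / nanosPerSecond + (nanosToWait % nanosPerSecond == 0 ? 0 : 1);
    }

    /** Headers for a response; Retry-After is added only when the request was rejected. */
    static Map<String, String> headers(boolean allowed, long remainingTokens, long nanosToWait) {
        Map<String, String> headers = new LinkedHashMap<>();
        // Conventional, non-standard header name used here as a placeholder
        headers.put("X-RateLimit-Remaining", Long.toString(remainingTokens));
        if (!allowed) {
            headers.put("Retry-After", Long.toString(retryAfterSeconds(nanosToWait)));
        }
        return headers;
    }
}
```

A rejected request would then be answered with status `RateLimitHeaders.TOO_MANY_REQUESTS` plus these headers, while an allowed request carries only the remaining-quota header.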

5. Combine Rate Limiting with Security Controls

Use along with:

- API keys
- WAF rules

Conclusion

Rate limiting and throttling are essential components of a robust API strategy. Bucket4j gives Spring Boot applications an elegant, high-performance way to manage traffic, protect resources, and ensure fair usage across clients.

Whether your application runs on a single node or across a distributed Kubernetes cluster, Bucket4j provides the flexibility and precision needed to maintain system stability and protect your APIs from misuse.

