In a microservices architecture, services evolve independently, and over time their data contracts change with them, a process commonly called schema evolution. If not handled carefully, even a minor schema change can break inter-service communication and lead to data loss, runtime errors, or system outages.

This article explores how to safely manage schema evolution across microservices, ensuring backward and forward compatibility using well-established patterns and tools.

Understanding the Problem

Let’s say Service A sends a JSON payload to Service B. At version 1, the payload looks like:

```json
{
  "name": "Alice",
  "email": "alice@example.com"
}
```

In version 2, Service A adds a new field:

```json
{
  "name": "Alice",
  "email": "alice@example.com",
  "birthday": "1990-01-01"
}
```

If Service B isn’t designed to ignore unknown fields or parse optional ones, this evolution can cause failures.
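
As a rough illustration of what "designed to ignore unknown fields" can mean on the consumer side, here is a minimal tolerant-reader sketch. It assumes the JSON has already been parsed into a flat map of strings; the UserView class and its fromPayload method are hypothetical names used only for this example.

```java
import java.util.Map;
import java.util.Optional;

// Hypothetical consumer-side view of the payload: only the fields Service B uses.
final class UserView {
    final String name;
    final String email;
    final Optional<String> birthday; // added in version 2, so it may be absent

    private UserView(String name, String email, Optional<String> birthday) {
        this.name = name;
        this.email = email;
        this.birthday = birthday;
    }

    // Tolerant read: required fields must be present, optional fields may be
    // missing, and any key not read here is ignored instead of rejected.
    static UserView fromPayload(Map<String, String> payload) {
        String name = payload.get("name");
        String email = payload.get("email");
        if (name == null || email == null) {
            throw new IllegalArgumentException("missing required field: name or email");
        }
        return new UserView(name, email, Optional.ofNullable(payload.get("birthday")));
    }
}
```

With this shape, both the version 1 and the version 2 payload are accepted, and a field the consumer has never heard of is dropped silently.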

Core Challenges in Schema Evolution

- Tight Coupling: Changes in one service force changes in others.

- Data Loss: Older clients may not understand or persist new fields.

- Deserialization Failures: Strict deserializers may reject unknown fields.

- Versioning Confusion: It is difficult to know which schema version each service is producing or consuming.

Best Practices for Schema Evolution

1. Design for Forward and Backward Compatibility

A schema change is backward compatible when consumers on the new schema can still read data written with the old one, and forward compatible when consumers still on the old schema can read data written with the new one. Aim for both.

Guidelines:

- Add, but don’t remove or rename fields.

- Never change the data type of an existing field.

- Mark new fields as optional.
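
The first two guidelines can be checked mechanically. The sketch below is one possible way to do it, assuming a schema is modelled as a simple map from field name to type name; SchemaChangeChecker is a hypothetical helper, and it does not cover the third guideline because this simplified model carries no notion of optional fields.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical checker: a schema is modelled as field name -> type name.
final class SchemaChangeChecker {
    // Returns one message per violated guideline; an empty list means the
    // change only added fields.
    static List<String> violations(Map<String, String> oldSchema, Map<String, String> newSchema) {
        List<String> problems = new ArrayList<>();
        for (Map.Entry<String, String> field : oldSchema.entrySet()) {
            String newType = newSchema.get(field.getKey());
            if (newType == null) {
                // A rename is indistinguishable from a removal plus an addition.
                problems.add("removed or renamed: " + field.getKey());
            } else if (!newType.equals(field.getValue())) {
                problems.add("type changed: " + field.getKey());
            }
        }
        return problems;
    }
}
```

A check like this could run in a build pipeline against the previously released schema, so that a breaking change is caught before it ships.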

Reference: Protocol Buffers Versioning Guide

2. Use Format-Aware Serializers

Leverage formats that support schema evolution inherently.

Common Choices:

- Protocol Buffers (Protobuf) – Compact, type-safe, and evolution-aware.

- Apache Avro – Popular with Kafka, schema registry support.

- JSON with tolerant deserializers – Use libraries like Jackson with @JsonIgnoreProperties(ignoreUnknown = true).

Example (Jackson):

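```java
import com.fasterxml.jackson.annotation.JsonIgnoreProperties;

// Unknown JSON properties (such as "birthday") are skipped instead of failing.
@JsonIgnoreProperties(ignoreUnknown = true)
public class User {
    public String name;  // public fields so Jackson can bind them without setters
    public String email;
}
```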

3. Implement Schema Versioning

Add a version field to payloads, headers, or topic names.

```json
{
  "version": "v2",
  "name": "Alice",
  "email": "alice@example.com",
  "birthday": "1990-01-01"
}
```

Then route based on version or use version-aware consumers.
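
A version-aware consumer might look roughly like the sketch below. It assumes the payload has already been parsed into a flat map, and it treats a payload without a version field as v1; both that default and the VersionRouter name are assumptions made for illustration.

```java
import java.util.Map;

// Hypothetical dispatcher: picks a handler from the payload's "version" field.
final class VersionRouter {
    static String handle(Map<String, String> payload) {
        // Assumption: payloads without a version field predate versioning, so treat them as v1.
        String version = payload.getOrDefault("version", "v1");
        switch (version) {
            case "v1":
                return handleV1(payload);
            case "v2":
                return handleV2(payload);
            default:
                // Fail loudly on versions this consumer was never built for.
                throw new IllegalArgumentException("unsupported schema version: " + version);
        }
    }

    private static String handleV1(Map<String, String> p) {
        return "user " + p.get("name");
    }

    private static String handleV2(Map<String, String> p) {
        String birthday = p.get("birthday"); // optional even in v2
        return birthday == null
                ? "user " + p.get("name")
                : "user " + p.get("name") + " born " + birthday;
    }
}
```

Rejecting unknown versions explicitly is a design choice: it turns a silent misread into a visible error that points at the consumer needing an upgrade.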

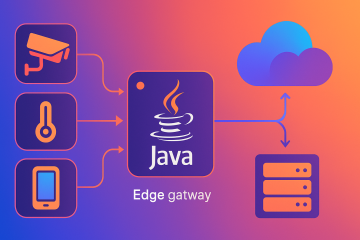

4. Use API Gateways for Transformation

If your consumers expect old payloads, an API gateway can mediate between versions, transforming requests or responses on the fly.

- Use tools like Kong, Spring Cloud Gateway, or AWS API Gateway to handle transformations.
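
Whatever gateway is used, the transformation itself is usually small. The following sketch shows the kind of downgrade step a gateway plugin or filter could apply, written as plain Java rather than as configuration for any specific product; PayloadDowngrader and its field list are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;

// Hypothetical transformation step: reduces a newer payload to the v1 shape.
final class PayloadDowngrader {
    // Fields that a v1 consumer knows about (assumed from the examples in this article).
    private static final Set<String> V1_FIELDS = Set.of("name", "email");

    static Map<String, String> toV1(Map<String, String> newerPayload) {
        Map<String, String> v1 = new LinkedHashMap<>();
        for (Map.Entry<String, String> entry : newerPayload.entrySet()) {
            // Copy only what v1 defines; "version", "birthday", and anything else are dropped.
            if (V1_FIELDS.contains(entry.getKey())) {
                v1.put(entry.getKey(), entry.getValue());
            }
        }
        return v1;
    }
}
```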

5. Schema Registry in Event-Driven Systems

For Kafka or other message queues, use a schema registry (like Confluent Schema Registry) to run compatibility checks, so that an incompatible schema is rejected when a producer tries to register it.

This helps you enforce:

- BACKWARD compatibility: New schema must read old data.

- FORWARD compatibility: Old schema must read new data.

- FULL compatibility: Both directions must work.
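
To make the three modes concrete, here is a deliberately simplified sketch. It assumes a schema is just a map from field name to whether that field has a default value, and it ignores type changes entirely; a real registry applies richer, format-specific rules. CompatibilityModes is a hypothetical name.

```java
import java.util.Map;

// Simplified model: a schema maps each field name to "has a default value".
final class CompatibilityModes {
    // A reader can decode the writer's data if every field the reader expects
    // is either present in the data or can be filled from a default.
    static boolean canRead(Map<String, Boolean> readerSchema, Map<String, Boolean> writerSchema) {
        for (Map.Entry<String, Boolean> field : readerSchema.entrySet()) {
            boolean present = writerSchema.containsKey(field.getKey());
            boolean hasDefault = field.getValue();
            if (!present && !hasDefault) {
                return false;
            }
        }
        return true;
    }

    // BACKWARD: the new schema must read data written with the old schema.
    static boolean backward(Map<String, Boolean> oldSchema, Map<String, Boolean> newSchema) {
        return canRead(newSchema, oldSchema);
    }

    // FORWARD: the old schema must read data written with the new schema.
    static boolean forward(Map<String, Boolean> oldSchema, Map<String, Boolean> newSchema) {
        return canRead(oldSchema, newSchema);
    }

    // FULL: both directions must hold.
    static boolean full(Map<String, Boolean> oldSchema, Map<String, Boolean> newSchema) {
        return backward(oldSchema, newSchema) && forward(oldSchema, newSchema);
    }
}
```

Under this model, adding a field without a default breaks BACKWARD but not FORWARD, and removing a field that had no default breaks FORWARD but not BACKWARD.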

6. Contract Testing

Use tools like:

- Pact: For consumer-driven contract testing.

- Spring Cloud Contract: For generating test stubs and ensuring API compatibility.

These tools ensure that schema expectations are synchronized between producers and consumers.
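
The idea behind consumer-driven contracts can be sketched without any framework. The example below is not the Pact or Spring Cloud Contract API; it is a hypothetical ConsumerContract class that records which fields a consumer relies on and checks a sample provider payload against them.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical contract: the fields a consumer depends on and their expected Java types.
final class ConsumerContract {
    private final Map<String, Class<?>> requiredFields;

    ConsumerContract(Map<String, Class<?>> requiredFields) {
        this.requiredFields = requiredFields;
    }

    // Returns one message per broken expectation; extra provider fields are fine.
    List<String> verify(Map<String, Object> providerSample) {
        List<String> failures = new ArrayList<>();
        for (Map.Entry<String, Class<?>> expected : requiredFields.entrySet()) {
            Object value = providerSample.get(expected.getKey());
            if (value == null) {
                failures.add("missing field: " + expected.getKey());
            } else if (!expected.getValue().isInstance(value)) {
                failures.add("wrong type for field: " + expected.getKey());
            }
        }
        return failures;
    }
}
```

Run in the provider's test suite, a check like this fails the build when a change would break a known consumer, which is the role the dedicated tools play with far more rigor.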

7. Deprecate Gracefully

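Mark old fields as deprecated in documentation or, where the format supports it, in the schema itself (Protobuf has a deprecated field option; in Avro, a note in the field's doc attribute serves the same purpose). Give consumers time to migrate before removal.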

Example in Protobuf:

```proto
string legacy_field = 4 [deprecated = true];
```

Real-World Use Case: Schema Evolution with Kafka and Avro

In a distributed e-commerce system:

- Order Service publishes order events in Avro.

- Notification Service consumes these events.

When a new field deliveryEstimate is added:

- The field is declared with a default value, so the schema registry accepts the new schema as backward compatible.

- The consumer is designed to ignore unknown fields.

- After testing and rollout, the Notification Service is upgraded to use the new field.

No downtime, no broken services.

Summary

Schema evolution is a reality in any scalable system. But with proactive design, modern serialization formats, and rigorous contract testing, it’s entirely manageable.

By treating schemas as contracts and embracing version-aware design, teams can evolve independently and deliver features without fear of breaking integrations.
